Boost Testing Efficiency with Launchable

In this blog post, we’ll introduce Launchable, a testing tool that plugs into CI and can significantly improve the efficiency of testing our movie recommendation service. Since our team initially used Jenkins CI, we’ll start by discussing its limitations and how Launchable addresses them. Next, we’ll walk through a hands-on mini tutorial to help you get started with Launchable. Finally, we’ll outline some limitations of Launchable and wrap up the post with a conclusion.

Struggles with Jenkins CI

During our work on Milestone 2, our team utilized Jenkins CI, but we encountered several challenges that made the experience less than ideal. These difficulties can be attributed to the following factors:

- Self-hosted nature: Since Jenkins CI is self-hosted, whenever our actions triggered a build, we had to log into our separate server to check the build status. This extra step complicated the process, particularly for team members who were not responsible for launching Jenkins CI.

- User-side computational resources: As a self-hosted solution, Jenkins CI demands computational resources from the user. This requirement creates a barrier for users without access to their own server, making it challenging for them to utilize Jenkins CI.

- No real-time build status on GitHub: With Jenkins CI, we could only check the build status on our separate server, by visiting the specific port Jenkins was running on. The lack of a real-time status indicator on GitHub made it difficult to track the progress of our builds.

- Full test execution on every push: Whenever we pushed code, even for minor changes or isolated features, Jenkins CI would execute the entire test suite. As our repository grew, this became increasingly inefficient and resource-intensive, leading to wasted time and energy.

Embracing Launchable

Frustrated by these limitations, we turned to an alternative tool: Launchable. This platform addressed the challenges we faced with Jenkins CI in the following ways:

- Cloud-hosted: Launchable is a cloud service, so we can set it up with GitHub Actions and run our tests on GitHub's hosted runners whenever we push or open a pull request, without needing a separate server.

- No user-side computational resources: Launchable doesn’t require users to have their own server, making it more accessible for those new to CI.

- Real-time build status on GitHub: Once we set up GitHub Actions, we could view the build status directly on GitHub or in our team’s online workspace, eliminating the need to check a separate server.

- Selective test execution: Launchable selects the tests most relevant to each code change, so only that subset needs to run. This becomes increasingly valuable as the codebase grows, keeping test runs short and reducing resource consumption.

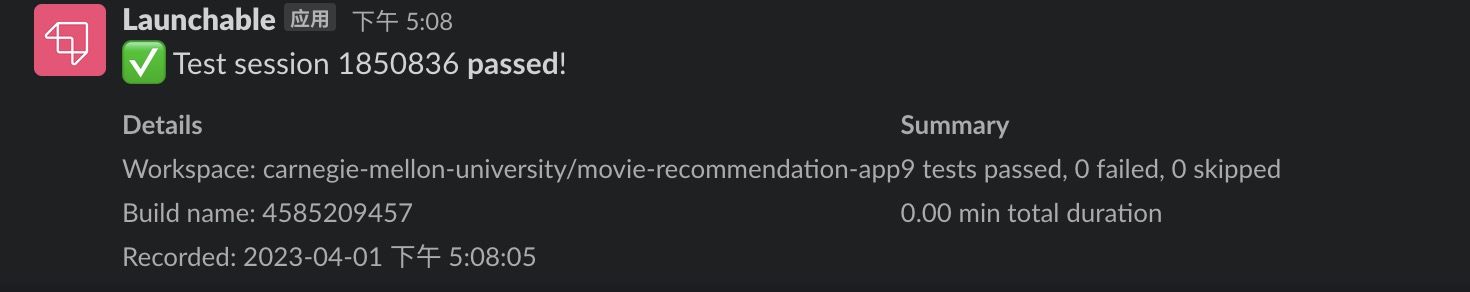

- Slack integration: Launchable can send notifications to a group channel on Slack whenever tests pass or fail, proving especially helpful for teams that rely on Slack for communication.

Mini Tutorial

Ready to get your hands dirty? Follow these steps!

Get Started

First, sign up for an account on Launchable:

http://launchableinc.com

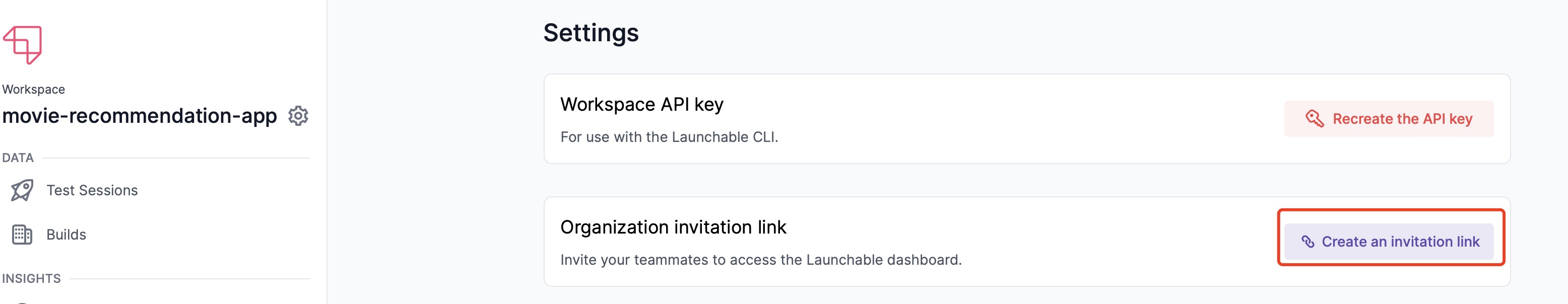

After that, create your organization (ours is Carnegie Mellon University) and a workspace (named after your app). Each workspace hosts one application or service. You can invite your teammates from the workspace settings.

Set Up the Launchable GitHub Action

Create an API key: Go to the settings page of your workspace, generate an API key, and store it somewhere safe.

Create an encrypted secret: Go to your GitHub repo’s settings, open the Secrets and variables dropdown, and click on Actions. Create a new repository secret named LAUNCHABLE_KEYS that contains the API key from the previous step.
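Before the workflow calls the Launchable CLI, it can help to fail fast when the secret is missing. The sketch below assumes the workflow exposes the `LAUNCHABLE_KEYS` secret to the job as the `LAUNCHABLE_TOKEN` environment variable, which is the variable that appears in the CI log later in this post. Both function names are hypothetical. The CLI's own `launchable verify` command is the more thorough check.

```python
import os
from typing import Mapping, Optional

# Assumption: the workflow sets LAUNCHABLE_TOKEN from the LAUNCHABLE_KEYS secret.
TOKEN_VAR = "LAUNCHABLE_TOKEN"


def has_launchable_token(env: Optional[Mapping[str, str]] = None) -> bool:
    """Return True if a non-empty API key is present in `env` (default: os.environ)."""
    env = os.environ if env is None else env
    return bool(env.get(TOKEN_VAR, "").strip())


def require_launchable_token(env: Optional[Mapping[str, str]] = None) -> None:
    """Exit with a readable message if the key is missing, before any CLI call."""
    if not has_launchable_token(env):
        raise SystemExit(
            f"{TOKEN_VAR} is not set; check the LAUNCHABLE_KEYS repository secret."
        )
```

In a workflow step, this could run as `python -c "from preflight import require_launchable_token; require_launchable_token()"`, where `preflight.py` is a hypothetical file holding the code above.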

Build your own launchable.yaml

With the LAUNCHABLE_KEYS secret added, we can now create the configuration for Launchable’s action. Our group uses pytest for all our tests, so we’ll provide an example for that. Launchable also supports many other popular test frameworks through its integrations.

```yaml
# GitHub action to run pytest and record test results in Launchable
```

Introducing the Test Selection Feature

The test selection feature in Launchable uses machine learning to analyze your code changes and intelligently select a subset of tests that are most relevant to those changes. This helps to reduce the time spent running tests, as it allows you to avoid running the entire test suite when a small change is made.
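To make the idea concrete, here is a deliberately naive, rule-based sketch of change-based test selection. It is not Launchable's algorithm, which learns from your test history with machine learning. It only shows the shape of the problem. Given the changed files and a hypothetical map from each test to the source files it depends on, it keeps the related tests and orders them so the most related run first.

```python
from typing import Dict, List, Set, Tuple


def select_relevant_tests(
    changed_files: Set[str], test_dependencies: Dict[str, Set[str]]
) -> List[str]:
    """Return tests that depend on at least one changed file, most related first.

    A naive, rule-based stand-in for Launchable's ML-based selection,
    for illustration only.
    """
    scored: List[Tuple[int, str]] = []
    for test_id, deps in test_dependencies.items():
        overlap = len(deps & changed_files)  # how many changed files this test depends on
        if overlap > 0:
            scored.append((overlap, test_id))
    # Highest overlap first; ties broken by name so the order is deterministic.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [test_id for _, test_id in scored]


if __name__ == "__main__":
    # Hypothetical dependency map for a movie recommendation service.
    deps = {
        "tests/test_evaluation.py::test_rmse": {"evaluation.py"},
        "tests/test_evaluation.py::test_online_metrics": {"evaluation.py", "logging_utils.py"},
        "tests/test_api.py::test_recommend_endpoint": {"app.py", "model.py"},
    }
    changed = {"evaluation.py", "logging_utils.py"}
    print(select_relevant_tests(changed, deps))
    # → ['tests/test_evaluation.py::test_online_metrics', 'tests/test_evaluation.py::test_rmse']
```

A hand-written map like this goes stale quickly. That is the problem a learned model avoids.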

The YAML file below has been updated to include the test selection feature:

```yaml
name: Test
```

Here’s a brief explanation of the new features in the YAML file:

- `launchable record build --name $GITHUB_RUN_ID`: This step records the build with Launchable, which is required for using the test selection feature. `GITHUB_RUN_ID` is used as the build identifier.
- `launchable subset --build $GITHUB_RUN_ID --target 90% pytest . > subset.txt`: This step requests a subset of the tests that pytest collects from the current directory. Launchable’s model ranks the tests by how likely they are to fail for the current changes. `--target 90%` then asks for a time-based subset: the top-ranked tests whose estimated duration adds up to roughly 90% of the full suite’s (the “Estimated duration (%)” column in the CI output). The command saves the list of selected tests to a file called `subset.txt`.
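Launchable’s CLI documentation describes `--target` as a variable, time-based subset. The simplified sketch below mimics that idea under three assumptions:

- The tests arrive already ranked. In Launchable, the model does the ranking.
- Each test has an estimated duration.
- Tests are kept in rank order until the next one would exceed the target share of the total estimated time.

This is an illustration, not Launchable’s implementation. With ten equally long tests and a 90% target, it reproduces the 9/1 split shown in the CI output below.

```python
from typing import List, Sequence, Tuple


def time_based_subset(
    ranked_tests: Sequence[Tuple[str, float]], target: float
) -> Tuple[List[str], List[str]]:
    """Split ranked (name, estimated_duration) pairs into (subset, remainder).

    Keeps tests in rank order until the next one would push the subset past
    `target` (a fraction between 0 and 1) of the total estimated duration.
    """
    if not 0.0 <= target <= 1.0:
        raise ValueError("target must be between 0 and 1")
    total = sum(duration for _, duration in ranked_tests)
    budget = total * target
    subset: List[str] = []
    used = 0.0
    for name, duration in ranked_tests:
        # Small relative tolerance so float rounding doesn't drop a test that fits exactly.
        if used + duration > budget + 1e-9 * total:
            break
        subset.append(name)
        used += duration
    remainder = [name for name, _ in ranked_tests[len(subset):]]
    return subset, remainder


if __name__ == "__main__":
    # Ten equally long tests (about 1 ms each in the CI output), already ranked.
    tests = [(f"test_{i}", 1.0) for i in range(10)]
    subset, remainder = time_based_subset(tests, 0.9)
    print(len(subset), remainder)  # 9 ['test_9']
```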

After we changed the online evaluation code, our configured Launchable prioritized and selected tests according to the target percentage we provided. The CI output below shows this process: Launchable ranks the tests by how likely they are to fail for the change we just made and selects the top-ranked ones.

```
launchable subset --build $GITHUB_RUN_ID --target 90% pytest . > subset.txt
  shell: /usr/bin/bash -e {0}
  env:
    LAUNCHABLE_TOKEN: ***
    LAUNCHABLE_DEBUG: 1
    LAUNCHABLE_REPORT_ERROR: 1
Your model is currently in training
Launchable created subset 479830 for build 4585209457 (test session 1850835) in workspace carnegie-mellon-university/movie-recommendation-app

|           | Candidates | Estimated duration (%) | Estimated duration (min) |
|-----------|------------|------------------------|--------------------------|
| Subset    | 9          | 90                     | 0.00015                  |
| Remainder | 1          | 10                     | 1.66667e-05              |
|           |            |                        |                          |
| Total     | 10         | 100                    | 0.000166667              |
```

The final step, `pytest --junitxml=test-report.xml $(cat subset.txt)`, runs pytest on only the tests listed in `subset.txt` and writes the results to `test-report.xml`.
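If you would rather drive pytest from Python than from the shell, the sketch below builds the same argument list as `pytest --junitxml=test-report.xml $(cat subset.txt)`. It assumes `subset.txt` holds one pytest test ID per line. The helper name is hypothetical.

```python
from pathlib import Path
from typing import List


def pytest_args_from_subset(subset_path: str, report: str = "test-report.xml") -> List[str]:
    """Build the arguments of `pytest --junitxml=<report> $(cat <subset_path>)`.

    Assumes one pytest test ID per line in the subset file; blank lines are ignored.
    """
    lines = Path(subset_path).read_text(encoding="utf-8").splitlines()
    test_ids = [line.strip() for line in lines if line.strip()]
    return [f"--junitxml={report}", *test_ids]
```

For example, `sys.exit(pytest.main(pytest_args_from_subset("subset.txt")))` runs the subset in-process. Passing a list avoids the shell’s word splitting, so a test ID that contains a space stays a single argument. As with the shell version, an empty `subset.txt` leaves only the `--junitxml` flag, and pytest then falls back to collecting its default test paths.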

By using the test selection feature in Launchable, you can reduce the time and resources spent on running tests, making your CI process more efficient.

Analyzing Test Reports in Launchable

Launchable provides detailed test reports that help you understand the test results and trends better. To access these reports:

Log in to your Launchable account and navigate to your workspace.

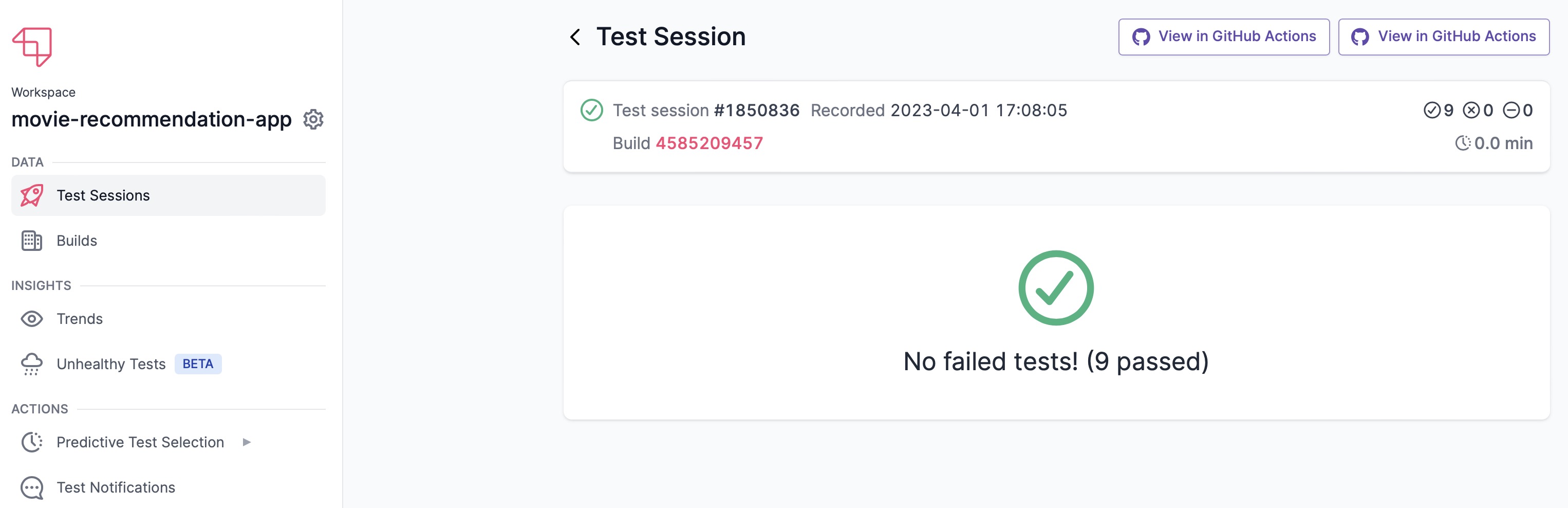

Click on “Test Sessions” in the sidebar to see an overview of all test runs.

Click on a specific test run to view detailed information, such as the pass/fail ratio, test duration, and test output. If all your tests have passed, you will see a session like the one in the screenshot.

These insights can help your team identify patterns in test failures, monitor test performance, and optimize the testing process.
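The same basic numbers can be sanity-checked locally. The sketch below summarizes the `test-report.xml` file that the workflow’s `pytest --junitxml` step writes. It reports pass/fail counts, the pass ratio, and the total duration, using only Python’s standard library. It reads pytest’s JUnit XML output, not any Launchable report format or API.

```python
import xml.etree.ElementTree as ET
from typing import Dict


def summarize_junit(xml_text: str) -> Dict[str, float]:
    """Count passed/failed/skipped test cases and total time in a JUnit XML report."""
    root = ET.fromstring(xml_text)
    total = failed = skipped = 0
    duration = 0.0
    # iter() walks all descendants, so this works whether the root is
    # <testsuites> (pytest's default) or a single <testsuite>.
    for case in root.iter("testcase"):
        total += 1
        duration += float(case.get("time", "0"))
        if case.find("failure") is not None or case.find("error") is not None:
            failed += 1
        elif case.find("skipped") is not None:
            skipped += 1
    passed = total - failed - skipped
    return {
        "total": total,
        "passed": passed,
        "failed": failed,
        "skipped": skipped,
        "pass_ratio": passed / total if total else 0.0,
        "duration_s": duration,
    }
```

Usage: `summarize_junit(open("test-report.xml", encoding="utf-8").read())`.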

Enable Slack Notifications

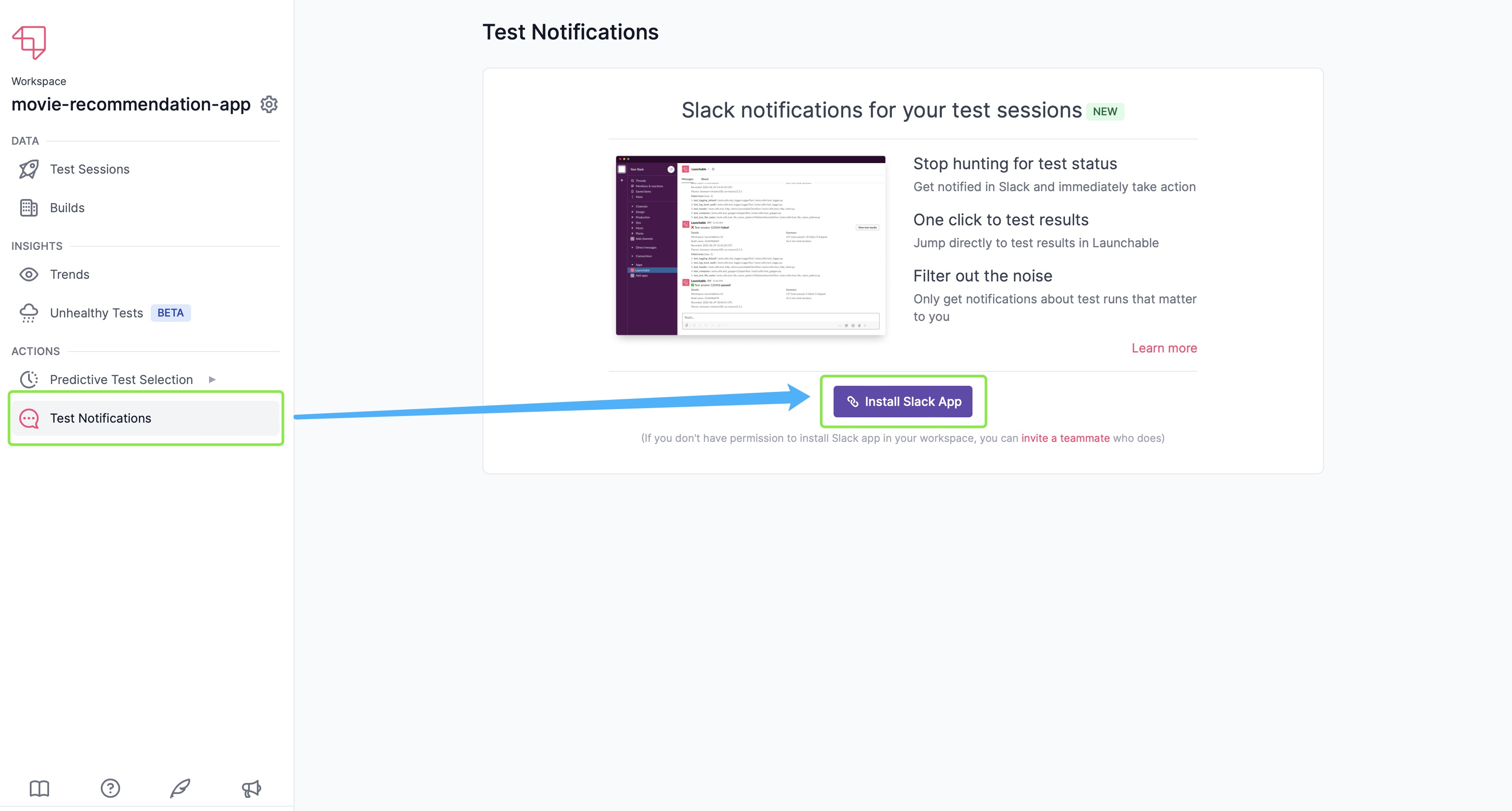

To receive notifications on Slack when tests pass or fail, follow these steps:

Go to your Launchable dashboard and click on Test Notifications.

Click on “Install Slack” and follow the instructions to integrate Launchable into your Slack workspace.

In your desired Slack channel, type the following command to subscribe to notifications:

```
/launchable subscribe <YOUR_LAUNCHABLE_WORKSPACE> GITHUB_ACTOR=<YOUR_GITHUB_USERNAME>
```

Replace `<YOUR_LAUNCHABLE_WORKSPACE>` with the name of your Launchable workspace and `<YOUR_GITHUB_USERNAME>` with your GitHub username. Now, whenever tests pass or fail, you’ll receive real-time updates in your group’s Slack channel. This feature is particularly helpful for teams that rely heavily on Slack for communication.


Limitations

- Lack of large local model support: Because the tests run on remote CI runners rather than on your own machine, projects that rely on large local models may run into issues. The absence of these models on the remote runner can lead to spurious test failures. A workaround is to upload the models to a cloud storage service or use smaller models for testing, but this may not be ideal in all scenarios.

- Accuracy of test selection: While Launchable’s ML-based test selection is powerful, it is not perfect. The selected tests may miss some edge cases, especially when the target percentage is set to a low value. Weigh the risks when choosing the target percentage, and assess the coverage the selected tests provide.

- Dependency on external service: Relying on Launchable as a cloud-hosted testing platform means that your testing process is dependent on an external service. Any issues or downtime with Launchable can potentially disrupt your CI/CD pipeline. It’s important to consider this dependency and have a backup plan if needed.

- Additional cost: While Launchable offers a free tier, using the platform for larger teams and more extensive test suites may require a paid plan. This additional cost should be factored into your project’s budget and decision-making process.
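For the large-model limitation, one lightweight mitigation is to skip tests whose large model file is absent on the CI runner, rather than letting them fail. The sketch uses the standard library’s `unittest.skipUnless`, which pytest honors for `unittest.TestCase` classes. `pytest.mark.skipif` is the pytest-native equivalent. The path `models/recommender.pkl` is hypothetical. The trade-off is that these tests are reported as skipped and do not run in CI, so they still need to run somewhere the model is available.

```python
import unittest
from pathlib import Path

# Hypothetical location of a large model that exists only on developer machines.
MODEL_PATH = Path("models/recommender.pkl")


def model_available(path: Path = MODEL_PATH) -> bool:
    """Return True if the (large, locally stored) model file exists."""
    return path.is_file()


@unittest.skipUnless(model_available(), f"{MODEL_PATH} not found (e.g. on a CI runner)")
class TestWithFullModel(unittest.TestCase):
    def test_model_file_is_not_empty(self):
        # Placeholder check; real tests would load the model and score predictions.
        self.assertGreater(MODEL_PATH.stat().st_size, 0)
```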

Resources

(Feel free to drop any comments or questions, or buy me a coffee!)


https://realzza.github.io/blog/Boost-Testing-Efficiency-with-Launchable/